Nati's Blog

Docker: From Containers to Cloud-Native Applications

Introduction

Software development once faced a persistent challenge: the infamous "works on my machine" problem. Developers struggled with inconsistent environments as applications that ran perfectly in development would mysteriously fail in production. This dependency nightmare plagued teams for years until Docker emerged in 2013, revolutionizing how we package and deploy applications.

This article explores Docker's history, the technical foundations that make it work, and how it fits into today's cloud-native ecosystem.

Evolution of Infrastructure: From Bare Metal to Containers

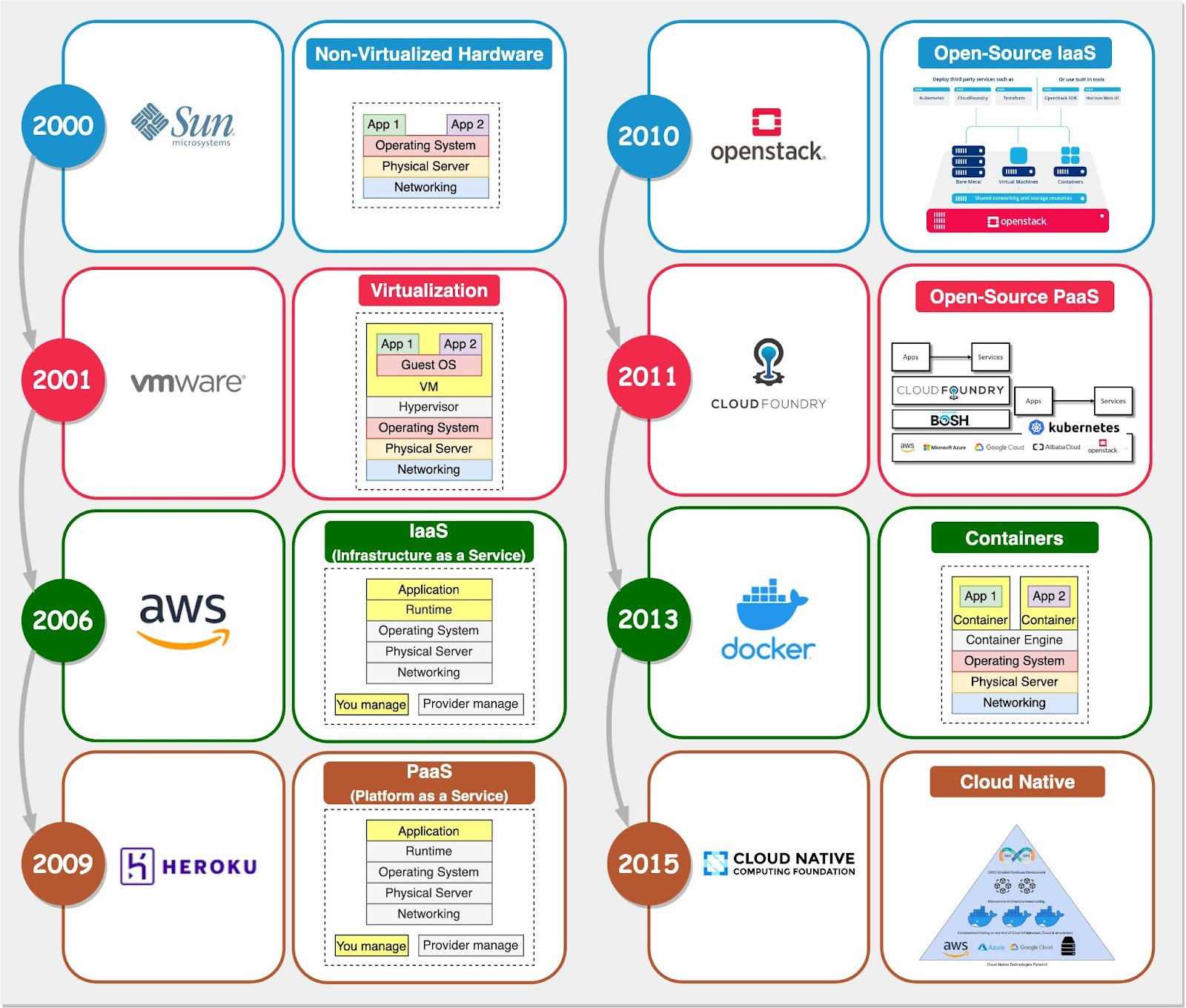

The journey to Docker can be understood through four major infrastructure phases:

1. Bare Metal Era (Pre-2000s)

In this period, applications ran directly on physical servers. Each new application often required:

- Purchasing new hardware

- Physical installation and configuration

- Manual setup of the operating system and dependencies

Example challenge: Adding a new service could take weeks or months just for hardware procurement.

2. Hardware Virtualization (2000s)

Virtual machines (VMs) allowed multiple isolated environments on a single physical server:

- More efficient hardware utilization

- Multiple applications could run isolated from each other

- Easier recovery and provisioning

Example: A single powerful server could host multiple VMs running different operating systems and applications.

```bash
# Example of launching a VM with VirtualBox CLI
VBoxManage startvm "Ubuntu Server" --type headless
```

3. Infrastructure as a Service (2006+)

Cloud providers like AWS offered on-demand virtual resources:

- No physical hardware management

- Pay-per-use model

- Global availability

Example: Amazon EC2 instances could be launched with an API call, eliminating hardware provisioning time.

```bash
# Example of launching an EC2 instance with AWS CLI
aws ec2 run-instances --image-id ami-0abcdef1234567890 --instance-type t2.micro
```

4. Platform as a Service (2009+)

Services like Heroku and Cloud Foundry provided managed platforms for applications:

- Focus on code, not infrastructure

- Built-in scaling and high availability

- Simplified deployment workflows

Example: Deploying an application to Heroku required just a git push.

```bash
# Deploying to Heroku
git push heroku main
```

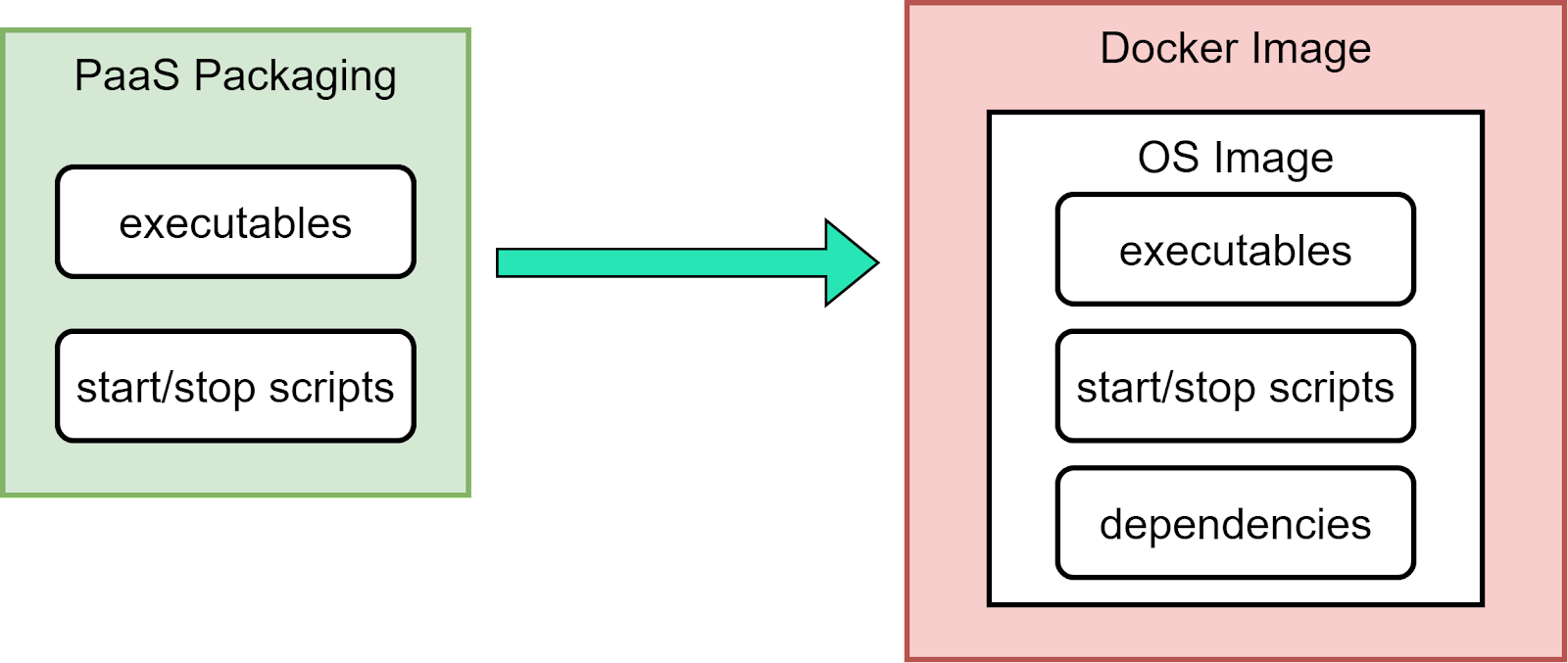

However, PaaS solutions still suffered from environment inconsistencies and dependency challenges.

Docker's Key Innovations

Docker made two critical improvements over previous systems when it launched in 2013:

1. Lightweight Containerization

Containers vs. Virtual Machines

Virtual Machines:

- Emulate hardware to run a complete operating system

- Every VM includes a full OS copy

- Significant resource overhead

- Slow to start (minutes)

Docker Containers:

- Share the host OS kernel

- Use Linux namespaces and cgroups for isolation

- Minimal resource overhead

- Start almost instantly (seconds)

2. Application Packaging

Docker created a standardized way to package applications with all their dependencies:

- Dockerfile - A recipe for creating images with clear build steps

- Image - Immutable package with the application and dependencies

- Registry - Repository for storing and sharing images

- Container - Running instance of an image
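To make the link between registry, image, and tag concrete, here is a minimal Python sketch that splits an image name such as `node:14-alpine` into its parts. The function name `parse_image_ref` is hypothetical, and the rules are a simplification of Docker's naming conventions: a name without a registry host is assumed to live on Docker Hub (`docker.io`), single-word Docker Hub names are placed under `library/`, a missing tag defaults to `latest`, and digests (`@sha256:...`) are not handled.

```python
def parse_image_ref(ref: str) -> tuple[str, str, str]:
    """Split an image reference into (registry, repository, tag).

    Simplified sketch: digests (@sha256:...) are not handled.
    """
    # The first path component counts as a registry host only if it looks like one.
    first, slash, rest = ref.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref

    # The tag follows the last ':' that comes after the last '/'.
    name, colon, tag = remainder.rpartition(":")
    if not colon or "/" in tag:
        name, tag = remainder, "latest"

    # Official Docker Hub images live under the "library/" namespace.
    if registry == "docker.io" and "/" not in name:
        name = "library/" + name
    return registry, name, tag


print(parse_image_ref("node:14-alpine"))
print(parse_image_ref("localhost:5000/webapp"))
```

The same image can therefore be pushed to a different registry simply by giving it a name with a different host prefix.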

This largely addressed the "works on my machine" problem: the same image, with the same dependencies, runs in every environment.

The Container Revolution: Docker vs Kubernetes

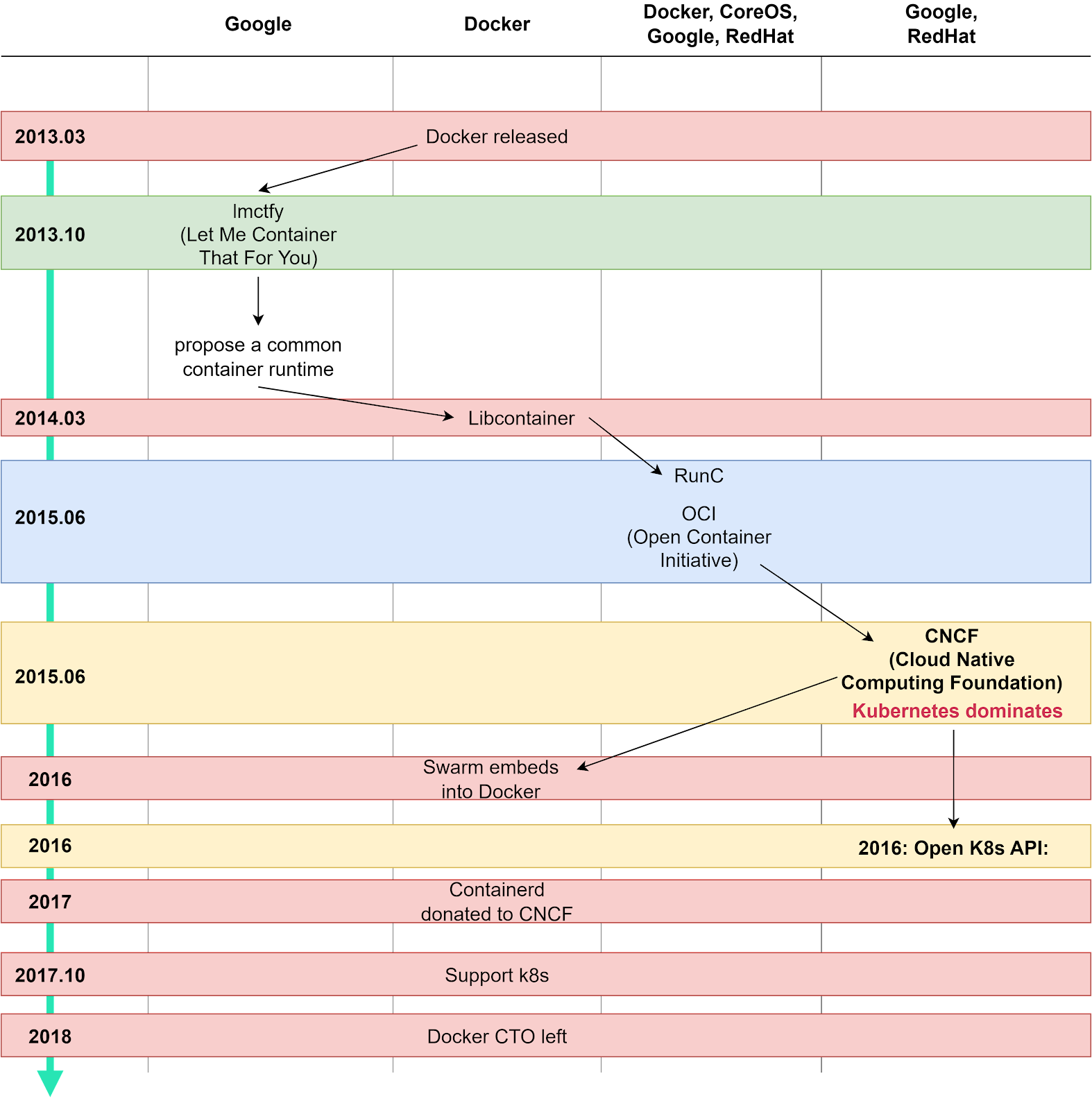

Docker quickly gained popularity, but its path wasn't without competition. As container technology became more important, strategic battles emerged:

Key Milestones:

2013: Docker is released as an open-source project

2014: Google, which had open-sourced its lmctfy container technology in 2013, announces Kubernetes and begins collaborating with Docker on libcontainer

2015:

- Formation of the Open Container Initiative (OCI)

- Docker donates libcontainer, which becomes the basis of runC

- The Cloud Native Computing Foundation (CNCF) is founded under the Linux Foundation, with Google contributing Kubernetes as its first project

2016-2017:

- Kubernetes gains popularity as an orchestration platform

- Docker adds Kubernetes support to Docker Enterprise

2018 onward:

- Kubernetes becomes the dominant container orchestration platform

- Docker remains essential for container image building and local development

The battle between Docker and Kubernetes revealed an important lesson: open ecosystems often outcompete closed platforms. While Docker's orchestration ambitions faltered, its core container technology thrived within the broader cloud-native ecosystem.

Technical Deep Dive: How Docker Works

Images vs. Runtime

Docker's architecture separates static packaging (images) from dynamic execution (runtime):

- Image: Immutable template with application code, dependencies, and configuration

- Container: Running instance of an image with its own isolated environment

This separation ensures consistency while allowing runtime flexibility.

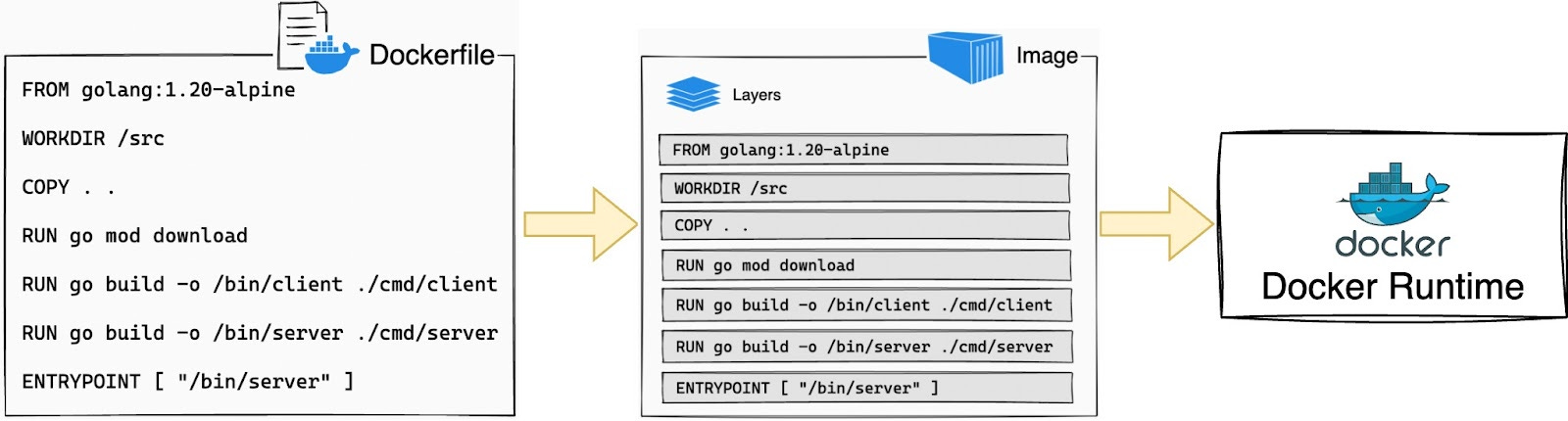

Building with Dockerfiles

A Dockerfile is a text file of instructions that defines how an image should be built:

```dockerfile
# Example Dockerfile for a Node.js application
FROM node:14-alpine
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
EXPOSE 3000
CMD ["node", "server.js"]
```

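Instructions that change the filesystem (RUN, COPY, ADD) each add a new layer to the image, while the others only record metadata. The image is built with docker build: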

```bash
# Building an image from a Dockerfile
docker build -t myapp:latest .
```
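Layering is also what makes rebuilds fast: a step can be reused from cache as long as it and every step before it are unchanged. The following Python sketch illustrates that idea by chaining a hash through the instructions. It is not Docker's actual cache algorithm (which, among other things, also considers the contents of copied files); `layer_ids` and `reusable_steps` are hypothetical names used only for illustration.

```python
import hashlib


def layer_ids(instructions: list[str]) -> list[str]:
    """Return one short ID per instruction, chained to all earlier ones."""
    ids = []
    parent = ""
    for instruction in instructions:
        digest = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        parent = digest  # the next step depends on everything so far
        ids.append(digest[:12])
    return ids


def reusable_steps(old: list[str], new: list[str]) -> int:
    """Count the leading steps whose IDs match, i.e. could come from cache."""
    count = 0
    for a, b in zip(layer_ids(old), layer_ids(new)):
        if a != b:
            break
        count += 1
    return count


before = ["FROM node:14-alpine", "WORKDIR /app", "COPY package*.json ./",
          "RUN npm install", "COPY . ."]
after = before[:3] + ["RUN npm ci"] + before[4:]
print(reusable_steps(before, after))  # 3
```

This is why the example Dockerfile copies `package*.json` and installs dependencies before `COPY . .`: ordinary source changes then leave the dependency layer reusable.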

The Linux Foundations of Docker

Docker's magic comes from three key Linux kernel features:

1. Namespaces - Process Isolation

Namespaces create isolated views of system resources for processes, including:

- PID namespace: Isolated process IDs

- Network namespace: Separate network interfaces

- Mount namespace: Isolated filesystem view

```bash
# Starting a container creates isolated namespaces
docker run -ti debian /bin/bash

# Inside the container, we only see container processes
ps aux
# OUTPUT:
# USER PID %CPU %MEM   VSZ  RSS TTY   STAT START TIME COMMAND
# root   1  0.0  0.1 18508 3188 pts/0 Ss   00:00 0:00 /bin/bash
```

Under the hood, Docker uses the clone() system call with namespace flags:

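```c
// Simplified example of namespace creation (needs _GNU_SOURCE and <sched.h>)
// child_stack_top must point to the top of a stack allocated for the child
pid_t pid = clone(main_function, child_stack_top, CLONE_NEWPID | SIGCHLD, NULL);
```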
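On Linux, the namespaces a process belongs to are visible as symlinks under `/proc/<pid>/ns`. The short Python sketch below lists them for the current process; the helper name `current_namespaces` is hypothetical. Two processes that share a namespace show the same identifier, so running this on the host and inside a container is one way to see the isolation described above.

```python
import os


def current_namespaces(pid: str = "self") -> dict[str, str]:
    """Map namespace type (pid, net, mnt, ...) to its kernel identifier.

    Returns an empty dict where /proc/<pid>/ns is unavailable (e.g. non-Linux).
    """
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    try:
        for name in sorted(os.listdir(ns_dir)):
            # Each entry is a symlink whose target looks like "pid:[4026531836]".
            result[name] = os.readlink(os.path.join(ns_dir, name))
    except OSError:
        pass
    return result


for name, ident in current_namespaces().items():
    print(f"{name:10} {ident}")
```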

2. Control Groups (cgroups) - Resource Limiting

Cgroups limit the amount of system resources a container can use:

- CPU time

- Memory

- Disk I/O

- Network traffic (classified by cgroups so that tools such as tc can shape bandwidth)

```bash
# Run a container with memory and CPU limits
docker run -ti --memory=512m --cpus=0.5 debian /bin/bash
```

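The CPU limit corresponds to the following manual cgroup configuration (cgroup v1 paths; a quota of 50000 µs per 100000 µs period equals half a CPU). The memory limit would be set in the same way through the memory controller:

```bash
# Manual cgroup v1 configuration (without Docker), run as root
mkdir -p /sys/fs/cgroup/cpu/container
echo 100000 > /sys/fs/cgroup/cpu/container/cpu.cfs_period_us
echo 50000 > /sys/fs/cgroup/cpu/container/cpu.cfs_quota_us
echo $PID > /sys/fs/cgroup/cpu/container/tasks
```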
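The arithmetic behind these flags is simple enough to sketch in Python. With the default scheduler period of 100000 µs, `--cpus=0.5` translates to a quota of 50000 µs per period, and `--memory=512m` to 512 MiB expressed in bytes. The helper names `cfs_quota_us` and `parse_memory` are hypothetical, and the size parser is a simplified assumption that only accepts whole numbers with a `b`, `k`, `m`, or `g` suffix.

```python
def cfs_quota_us(cpus: float, period_us: int = 100_000) -> int:
    """Return the CFS quota (microseconds of CPU time per period) for a CPU limit."""
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    return int(round(cpus * period_us))


def parse_memory(limit: str) -> int:
    """Convert a size such as '512m' to bytes (binary units: k, m, g)."""
    units = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}
    suffix = limit[-1].lower()
    if suffix in units:
        return int(limit[:-1]) * units[suffix]
    return int(limit)  # a plain number is taken as bytes


print(cfs_quota_us(0.5))     # 50000
print(parse_memory("512m"))  # 536870912
```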

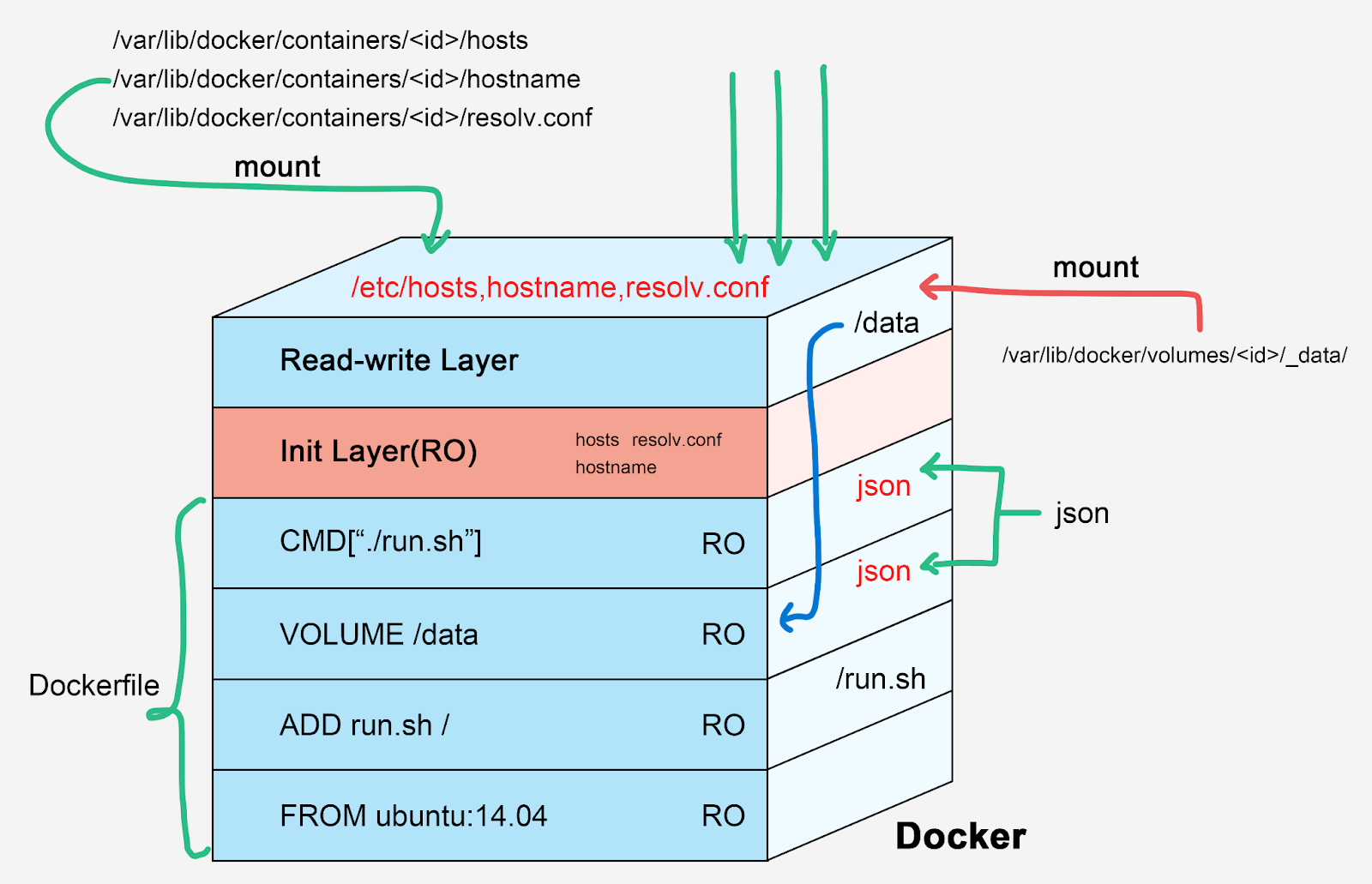

3. Union Filesystem - Layered Images

Docker uses a layered filesystem in which each filesystem-changing Dockerfile instruction creates a new layer:

- Base layers are read-only and shared between containers

- A thin writable layer is added for each running container

- Changes are written to the top layer, preserving the immutable image below

This architecture enables:

- Efficient storage (shared base layers)

- Quick container startup

- Immutable infrastructure patterns
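The lookup and copy-on-write behavior of a layered filesystem can be imitated in a few lines of Python with `collections.ChainMap`. This is only an analogy, not how a real storage driver works: layers are modeled as dictionaries from path to contents, file deletion is not modeled, and `start_container` is a hypothetical helper.

```python
from collections import ChainMap

# Read-only image layers, lowest first (file path -> contents).
base_layer = {"/etc/os-release": "debian", "/bin/sh": "<binary>"}
app_layer = {"/app/app.py": "print('v1')"}


def start_container(*image_layers: dict) -> ChainMap:
    """Stack a fresh writable layer on top of the shared image layers."""
    # ChainMap searches its maps left to right, so the newest layer goes first.
    return ChainMap({}, *reversed(image_layers))


c1 = start_container(base_layer, app_layer)
c2 = start_container(base_layer, app_layer)

# Writes always land in the first map, i.e. the container's own top layer.
c1["/app/app.py"] = "print('patched')"

print(c1["/app/app.py"])         # print('patched')
print(c2["/app/app.py"])         # print('v1')
print(app_layer["/app/app.py"])  # print('v1'), the image layer is unchanged
```

Both containers read the same base dictionaries, which mirrors how shared read-only layers keep storage use low and startup fast.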

Docker in Practice

Let's see how these concepts work together with a simple web application:

```dockerfile
# Dockerfile for a Python web application
FROM python:3.9-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
EXPOSE 5000
CMD ["python", "app.py"]
```
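The Dockerfile above starts `app.py`, which the article does not show. As a stand-in, here is a minimal sketch of what such a file could contain, using only the Python standard library (`wsgiref`). The response text and the `main` helper are assumptions made for illustration; a real application would more likely use a web framework listed in `requirements.txt`.

```python
from wsgiref.simple_server import make_server


def app(environ, start_response):
    """WSGI application: answer every request with a short text body."""
    body = b"Hello from inside a container\n"
    start_response("200 OK", [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]


def main(port: int = 5000) -> None:
    # Listen on all interfaces: traffic forwarded from a published port arrives
    # on the container's network interface, not on its loopback address.
    with make_server("0.0.0.0", port, app) as server:
        server.serve_forever()
```

Saved as `app.py` with a final `main()` call under the usual `if __name__ == "__main__":` guard, this listens on port 5000, the port the Dockerfile exposes. Binding to `0.0.0.0` rather than `127.0.0.1` matters, because a server bound to the container's loopback address cannot be reached through a published port.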

Building and running the application:

```bash
# Build the image
docker build -t webapp:latest .

# Run a container from the image
docker run -d -p 8080:5000 --name mywebapp webapp:latest

# Inspect the running container
docker ps
docker logs mywebapp

# Stop and remove the container
docker stop mywebapp
docker rm mywebapp
```

Docker Today and Beyond

While Docker revolutionized application packaging, the container landscape has evolved:

Current State of Docker Technologies

- Docker Engine: Still widely used for local development

- Docker Compose: Popular for multi-container development environments

- Dockerfile: The standard format for defining container images

- Docker Hub: Major public registry for container images

Broader Container Ecosystem

- containerd and CRI-O: Lower-level container runtimes used in Kubernetes

- Kubernetes: De facto standard for container orchestration

- Image Registry Options: Docker Hub, GitHub Container Registry, Amazon ECR, etc.

- BuildKit (Docker's current default builder), Buildah, Kaniko: Image building tools

Example Docker Compose File

```yaml
# docker-compose.yml for a web application with a database
version: '3'
services:
  web:
    build: .
    ports:
      - "8000:5000"
    depends_on:
      - db
    environment:
      DATABASE_URL: postgresql://postgres:example@db:5432/mydb
  db:
    image: postgres:13
    volumes:
      - postgres_data:/var/lib/postgresql/data
    environment:
      POSTGRES_PASSWORD: example
      POSTGRES_DB: mydb
volumes:
  postgres_data:
```
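Inside the `web` container, the application would read `DATABASE_URL` from its environment. The sketch below shows one way to do that in Python with the standard library; `database_settings` is a hypothetical helper, and how the resulting fields are passed to a database driver is left open. The host name `db` works because Compose places both services on a shared network where service names resolve through DNS.

```python
import os
from urllib.parse import urlsplit


def database_settings(url: str) -> dict:
    """Break a postgresql:// URL into the fields a database driver needs."""
    parts = urlsplit(url)
    return {
        "host": parts.hostname,
        "port": parts.port or 5432,
        "user": parts.username,
        "password": parts.password,
        "dbname": parts.path.lstrip("/"),
    }


# Fall back to the value from the Compose file when the variable is not set.
url = os.environ.get("DATABASE_URL", "postgresql://postgres:example@db:5432/mydb")
settings = database_settings(url)
print(settings["host"], settings["port"], settings["dbname"])
```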

Conclusion

Docker transformed application development by solving the persistent "works on my machine" problem through lightweight containerization and standardized packaging. It leveraged Linux kernel features (namespaces, cgroups, and union filesystems) to create portable, isolated environments that work consistently across development, testing, and production.

While Kubernetes has become the dominant orchestration platform, Docker's core concepts and tooling remain fundamental to cloud-native development. The separation of immutable images from runtime containers enables reproducible deployments and has become the foundation of modern software delivery.

As container technology continues to evolve, Docker's legacy lives on in the standardized container format it pioneered, which has become the universal packaging method for cloud applications.

References

- Docker Documentation: https://docs.docker.com/

- Open Container Initiative: https://opencontainers.org/

- Cloud Native Computing Foundation: https://www.cncf.io/

- Kubernetes Documentation: https://kubernetes.io/docs/home/

- Linux Kernel Namespaces: https://man7.org/linux/man-pages/man7/namespaces.7.html

- Linux Control Groups: https://man7.org/linux/man-pages/man7/cgroups.7.html